Introduction

Valence is a unified development environment that enables building trust-minimized cross-chain DeFi applications, called Valence Programs.

Valence Programs are:

- Easy to understand and quick to deploy: a program can be set up with a configuration file and no code.

- Extensible: if we don't yet support a DeFi integration out of the box, new integrations can be written in a matter of hours!

Example Use Case:

A DeFi protocol wants to bridge tokens to another chain and deposit them into a vault. After a certain date, it wants to unwind the position. While the position is active, it may also want to delegate the right to change vault parameters to a designated committee so long as the parameters are within a certain range. Without Valence Programs, the protocol would have two choices:

- Give the tokens to a multisig to execute actions on the protocol's behalf

- Write custom smart contracts and deploy them across multiple chains to handle the cross-chain token operations.

Valence Programs offer the DeFi protocol a third choice: rapidly configure and deploy a secure solution that meets its needs without trusting a multisig or writing complex smart contracts.

Valence Programs

There are two ways to execute Valence Programs.

-

On-chain Execution: Valence currently supports CosmWasm and EVM. SVM support coming soon. The rest of this section provides a high-level breakdown of the components that comprise a Valence Program using on-chain coprocessors.

-

Off-chain Execution via ZK Coprocessor: Early specifications for the Valence ZK System. We aim to move as much computation off-chain as possible since off-chain computation is a more scalable approach to building a cross-chain execution environment.

Domains

A domain is an environment in which the components that form a program (more on these later) can be instantiated (deployed).

Domains are defined by three properties:

- The chain: the blockchain's name e.g. Neutron, Osmosis, Ethereum mainnet.

- The execution environment: the environment under which programs (typically smart contracts) can be executed on that particular chain e.g. CosmWasm, EVM, SVM.

- The type of bridge used from the main domain to other domains e.g. Polytone over IBC, Hyperlane.

Within a particular ecosystem of blockchains (e.g. Cosmos), the Valence Protocol usually defines one specific domain as the main domain, on which some supporting infrastructure components are deployed. Think of it as the home base supporting the execution and operations of Valence Programs. This will be further clarified in the Authorizations & Processors section.

Below is a simplified representation of a program transferring tokens from a given input account on the Neutron domain, a CosmWasm-enabled smart contract platform secured by the Cosmos Hub, to a specified output account on the Osmosis domain, a well-known DeFi platform in the Cosmos ecosystem.

---

title: Valence Cross-Domain Program

---

graph LR

IA((Input

Account))

OA((Output

Account))

subgraph Neutron

IA

end

subgraph Osmosis

IA -- Transfer tokens --> OA

end

Valence Accounts

Valence Programs usually perform operations on tokens accross multiple domains. To ensure that the funds remain safe throughout a program's execution, Valence Programs rely on a primitive called Valence Accounts. Additionally, Valence Accounts can also be used to store data that is not directly related to tokens.

In this section we will introduce all the different types of Valence Accounts and explain their purpose.

Base Accounts

A Valence Base Account is an escrow contract that can hold balances for various supported token types (e.g., in Cosmos ics-20 or cw-20) and ensure that only a restricted set of operations can be performed on the held tokens.

Valence Base Accounts are created (i.e., instantiated) on a specific domain and bound to a specific Valence Program. Valence Programs will typically use multiple accounts during the program's lifecycle for different purposes. Valence Base Accounts are generic by nature; their use in forming a program is entirely up to the program's creator.

Using a simple token swap program as an example: the program receives an amount of Token A in an input account and will swap these Token A for Token B using a DEX on the same domain (e.g., Neutron). After the swap operation, the received amount of Token B will be temporarily held in a transfer account before being transfered to a final output account on another domain (e.g., Osmosis).

For this, the program will create the following accounts:

- A Valence Base Account is created on the Neutron domain to act as the Input account.

- A Valence Base Account is created on the Neutron domain to act as the Transfer account.

- A Valence Base Account is created on the Osmosis domain to act as the Output account.

---

title: Valence Token Swap Program

---

graph LR

IA((Input

Account))

TA((Transfer

Account))

OA((Output

Account))

DEX

subgraph Neutron

IA -- Swap Token A --> DEX

DEX -- Token B --> TA

end

subgraph Osmosis

TA -- Transfer token B --> OA

end

Note: this is a simplified representation.

Valence Base Accounts do not perform any operation by themselves on the held funds, the operations are performed by Valence Libraries.

Valence Storage Account

The Valence Storage Account is a type of Valence account that can store Valence Type data objects.

Like all other accounts, Storage Accounts follow the same pattern of approving and revoking authorized libraries from being able to post Valence Types into a given account.

While regular Valence (Base) accounts are meant for storage of fungible tokens, Valence Storage accounts are meant for storage of non-fungible objects.

API

Execute Methods

Storage Account is a simple component exposing the following execute methods:

#![allow(unused)] fn main() { pub enum ExecuteMsg { // Add library to approved list (only admin) ApproveLibrary { library: String }, // Remove library from approved list (only admin) RemoveLibrary { library: String }, // stores the given `ValenceType` variant under storage key `key` StoreValenceType { key: String, variant: ValenceType }, } }

Library approval and removal follow the same implementation as that of the fund accounts.

StoreValenceType is the key method of this contract. It takes in a key of type String, and its

associated value of type ValenceType.

If StoreValenceType is called by the owner or an approved library, it will persist the key-value

mapping in its state. Storage here works in an overriding manner, meaning that posting data

for a key that already exists will override its previous value and act as an update method.

Query Methods

Once a given type has been posted into the storage account using StoreValenceType call, it becomes available

for querying.

Storage account exposes the following QueryMsg:

#![allow(unused)] fn main() { pub enum QueryMsg { // Get list of approved libraries #[returns(Vec<String>)] ListApprovedLibraries {}, // Get Valence type variant from storage #[returns(ValenceType)] QueryValenceType { key: String }, } }

Interchain Accounts

A Valence Interchain Account is a contract that creates an ICS-27 Interchain Account over IBC on a different domain. It will then send protobuf messages to the ICA over IBC for them to be executed remotely. It's specifically designed to interact with other chains in the Cosmos ecosystem, and more in particular with chains that don't support smart contracts. To use this account contract, the remote chain must have ICA host functionality enabled and should have an allowlist that includes the messages being executed.

Valence Interchain Accounts are created (i.e., instantiated) on Neutron and bound to a specific Valence Program. Valence Programs will typically use these accounts to trigger remote execution of messages on other domains.

As a simple example, consider a Valence Program that needs to bridge USDC from Cosmos to Ethereum via the Noble Chain. Noble doesn't support CosmWasm or any other execution environment, so the Valence Program will use a Valence Interchain Account to first, create an ICA on Noble, and then send a message to the ICA to interact with the corresponding native module on Noble with the funds previously sent to the ICA.

For this, the program will create a Valence Interchain Account on the Neutron domain to create an ICA on the Noble domain:

---

title: Valence Interchain Account

---

graph LR

subgraph Neutron

IA[Interchain Account]

end

subgraph Noble

OA[Cosmos ICA]

end

IA -- "MsgDepositForBurn

protobuf" --> OA

Valence Interchain Accounts do not perform any operation by themselves, the operations are performed by Valence Libraries.

API

Instantiation

Valence Interchain Accounts are instantiated with the following message:

#![allow(unused)] fn main() { pub struct InstantiateMsg { pub admin: String, // Initial owner of the contract pub approved_libraries: Vec<String>, pub remote_domain_information: RemoteDomainInfo, // Remote domain information required to register the ICA and send messages to it } pub struct RemoteDomainInfo { pub connection_id: String, pub ica_timeout_seconds: Uint64, // relative timeout in seconds after which the packet times out } }

In this message, the connection_id of the remote domain and the timeout for the ICA messages are specified.

Execute Methods

#![allow(unused)] fn main() { pub enum ExecuteMsg { ApproveLibrary { library: String }, // Add library to approved list (only admin) RemoveLibrary { library: String }, // Remove library from approved list (only admin) ExecuteMsg { msgs: Vec<CosmosMsg> }, // Execute a list of Cosmos messages, useful to retrieve funds that were sent here by the owner for example. ExecuteIcaMsg { msgs: Vec<AnyMsg> }, // Execute a protobuf message on the ICA RegisterIca {}, // Register the ICA on the remote chain } }

Library approval and removal follow the same implementation as that of the fund accounts.

ExecuteMsg works in the same way as for the base account.

ExecuteIcaMsg is a list of protobuf messages that will be sent to the ICA on the remote chain. Each message contains

the type_url and the protobuf encoded bytes to be delivered.

RegisterIca is a permissionless call that will register the ICA on the remote chain. This call requires the

Valence Interchain Account to not have another ICA created and open on the remote chain.

Query Methods

Interchain account exposes the following QueryMsg:

#![allow(unused)] fn main() { pub enum QueryMsg { #[returns(Vec<String>)] ListApprovedLibraries {}, // Get list of approved libraries #[returns(IcaState)] IcaState {}, // Get the state of the ICA #[returns(RemoteDomainInfo)] RemoteDomainInfo {}, // Get the remote domain information } pub enum IcaState { NotCreated, // Not created yet Closed, // Was created but closed, so creation should be retriggered InProgress, // Creation is in progress, waiting for confirmation Created(IcaInformation), } pub struct IcaInformation { pub address: String, pub port_id: String, pub controller_connection_id: String, } }

There are two specific queries for the Valence Interchain Account. The first one is IcaState which returns the state of the ICA. The second one is RemoteDomainInfo which returns the remote domain information that was provided during instantiation.

ICAs can only be registered if the IcaState is NotCreated or Closed.

Libraries and Functions

Valence Libraries contain the business logic that can be applied to the funds held by Valence Base Accounts. Most often, this logic is about performing operations on tokens, such as splitting, routing, or providing liquidity on a DEX. A Valence Base Account has to first approve (authorize) a Valence Library for it to perform operations on that account's balances. Valence Libraries expose Functions that it supports. Valence Programs can be composed of a more or less complex graph of Valence Base Accounts and Valence Libraries to form a more or less sophisticated cross-chain workflow. During the course of a Valence Program's execution, Functions are called by external parties that trigger the library's operations on the linked accounts.

A typical pattern for a Valence Library is to have one (or more) input account(s) and one (or more) output account(s). While many libraries implement this pattern, it is by no means a requirement.

Valence Libraries play a critical role in integrating Valence Programs with existing decentralized apps and services that can be found in many blockchain ecosystems (e.g., DEXes, liquid staking, etc.).

Now that we know accounts cannot perform any operations by themselves, we need to revisit the token swap program example (mentioned on the Base Accounts page) and bring Valence Libraries into the picture: the program receives an amount of Token A in an input account, and a Token Swap library exposes a swap function that, when called, will perform a swap operation of Token A held by the input account for Token B using a DEX on the same domain (e.g., Neutron), and transfer them to the transfer account. A Token Transfer library that exposes a transfer function will transfer the Token B amount (when the function is called) to a final output account on another domain (e.g. Osmosis). In this scenario, the DEX is an existing service found on the host domain (e.g. Astroport on Neutron), so it is not part of the Valence Protocol.

The program is then composed of the following accounts & libraries:

- A Valence Base Account is created on the Neutron domain to act as the input account.

- A Valence Base Account is created on the Neutron domain to act as the transfer account.

- A Token Swap Valence Library is created on the Neutron domain, authorized by the input account (to be able to act on the held Token A balance), and configured with the input account and transfer account as the respective input and output for the swap operation.

- A Token Transfer Valence Library is created on the Neutron domain, authorized by the transfer account (to be able to act on the held Token B balance), and configured with the transfer account and output account as the respective input and output for the swap operation.

- A Valence Base Account is created on the Osmosis domain to act as the output account.

--- title: Valence Token Swap Program --- graph LR FC[[Function call]] IA((Input Account)) TA((Transfer Account)) OA((Output Account)) TS((Token Swap Library)) TT((Token Transfer Library)) DEX subgraph Neutron FC -- 1/Swap --> TS TS -- Swap Token A --> IA IA -- Token A --> DEX DEX -- Token B --> TA FC -- 2/Transfer --> TT TT -- Transfer Token B --> TA end subgraph Osmosis TA -- Token B --> OA end

This example highlights the crucial role that Valence Libraries play for integrating Valence Programs with pre-existing decentralized apps and services.

However, one thing remains unclear in this example: how are Functions called? This is where Programs and Authorizations come into the picture.

Programs and Authorizations

A Valence program is an instance of the Valence protocol. It is a particular arrangement and configuration of accounts and libraries across multiple domains (e.g., a POL lending relationship between two parties). Similarly to how a library exposes executable functions, programs are associated with a set of executable subroutines.

A subroutine is a vector of functions. A subroutine can call out to one or more functions from a single library, or from different libraries. A subroutine is limited to one execution domain (i.e., subroutines cannot use functions from libraries instantiated on multiple domains).

A subroutine can be:

- non‑atomic (e.g., execute function one; if that succeeds, execute function two; then three; and so on)

- atomic (e.g., execute function one, two, and three; if any fail, revert all steps)

Valence programs are typically used to implement complex cross‑chain workflows that perform financial operations in a trust‑minimized way. Because multiple parties may be involved in a program, the parties may wish for limitations on what various parties are authorized to do.

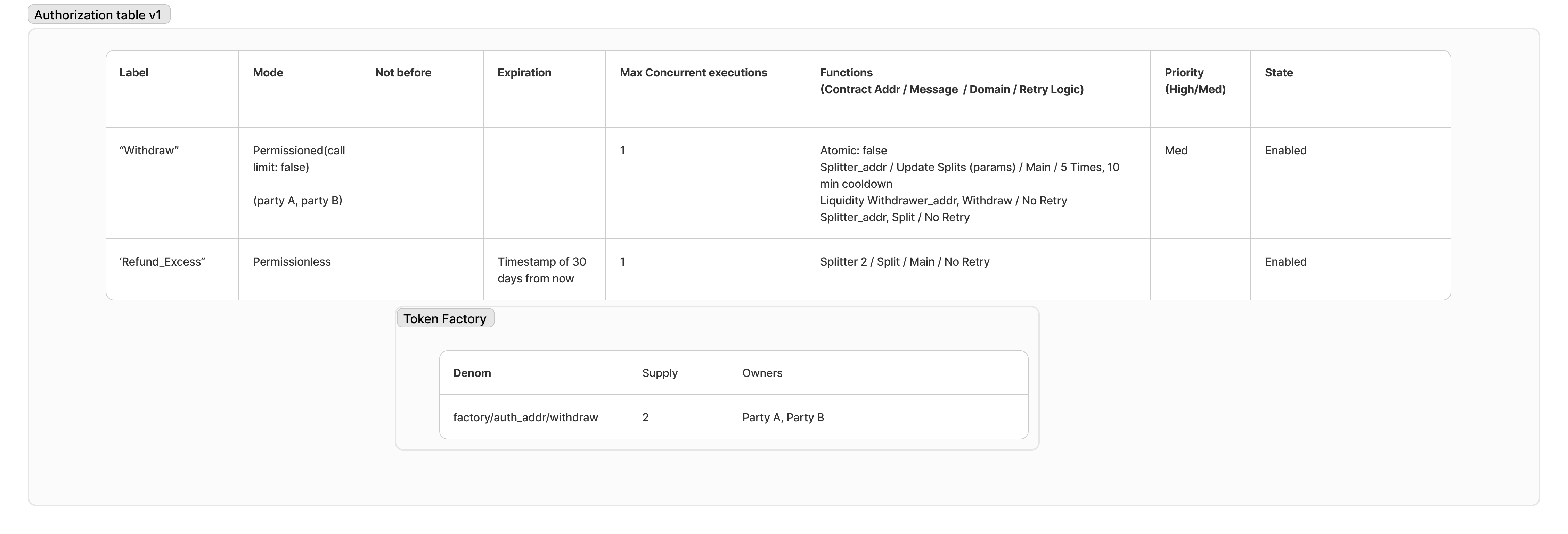

To specify fine‑grained controls over who can initiate the execution of a subroutine, program creators use the authorizations module.

The authorizations module supports access control configuration schemes such as:

| Authorization | Description |

|---|---|

| Open access | Anyone can initiate execution of a subroutine. |

| Permissioned access | Only permissioned actors can initiate execution of a subroutine. |

| Start time | Execution can only be initiated after a starting timestamp or block height. |

| End time | Execution can only be initiated up to a certain timestamp or block height. |

| Authorization model | CosmWasm: TokenFactory tokens (factory/{authorization_contract}/{label}). EVM: address‑based per label with contract/function constraints (no tokenization). |

| Expiration | Authorizations can expire. |

| Enable/disable | Authorizations can be enabled or disabled. |

| Parameter constraints | Authorizations can constrain parameters (e.g., limit to amount only, not denom or receiver). |

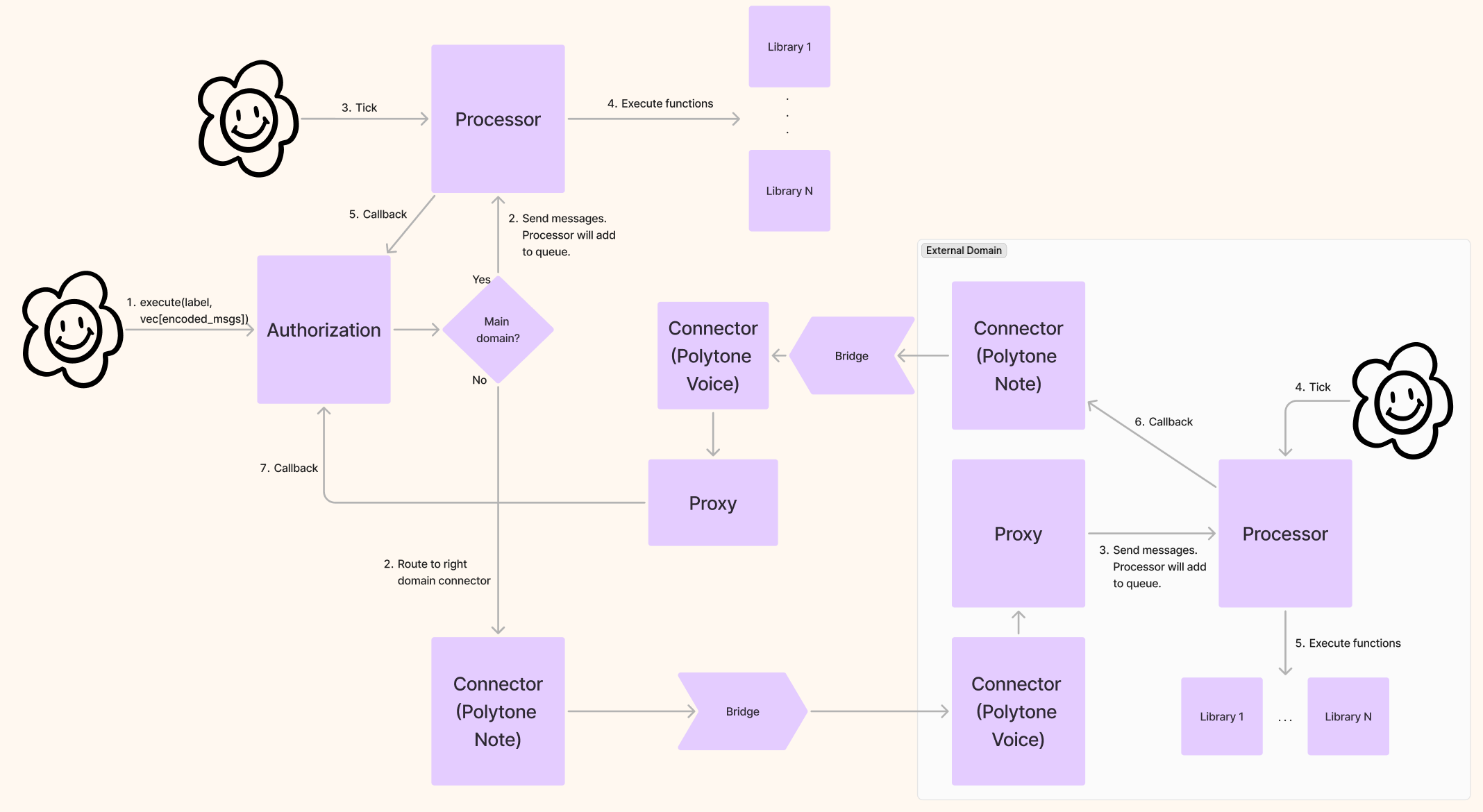

To support on‑chain execution, the protocol provides two contracts: the Authorization contract and the Processor contract.

The Authorization contract is the entry point for users. The user sends a set of messages to the Authorization contract and the label (id) of the authorization they want to execute. The Authorization contract verifies the sender and the messages, constructs a message batch based on the subroutine, and passes this batch to the Processor for execution.

- CosmWasm: permissioned workflows are enforced via TokenFactory‑minted per‑label tokens (burn/refund semantics with call limits).

- EVM: permissioned workflows are enforced via per‑label address allowlists with function‑level constraints (contract address + selector/hash), no tokens are minted.

The Processor receives a message batch and executes the contained messages in sequence.

- CosmWasm: maintains High/Medium priority FIFO queues of subroutines and exposes a permissionless

tickto process batches with retry/expiration handling. - EVM: the currently implemented Lite Processor executes immediately on receipt (no queues/insert/evict/retry), while a full queued Processor is scaffolded but not implemented.

graph LR; User --> |Subroutine| Auth(Authorizations) Auth --> |Message Batch| P(Processor) P --> |Function 1| S1[Library 1] P --> |Function 2| S2[Library 2] P --> |Function N| S3[Library N]

Introduction to Valence ZK

The Valence Protocol provides Zero-Knowledge proofs and a dedicated ZK Coprocessor system to enhance its capabilities, particularly in areas requiring complex computation, privacy, and verifiable off-chain operations. This ZK integration allows Valence to bridge the gap between the rich, flexible environment of off-chain processing and the trust-minimized, verifiable nature of blockchain execution.

At a high level, ZK proofs enable one party (the prover, in this case, the ZK Coprocessor) to prove to another party (the verifier, typically on-chain smart contracts) that a certain statement is true, without revealing any information beyond the validity of the statement itself. In Valence, this means that computationally intensive or private tasks can be executed off-chain by a "guest program" running on the ZK Coprocessor. This guest program produces a result along with a cryptographic proof attesting to the correctness of that result according to the program's logic.

This proof, which is relatively small and efficient to check, is then submitted to the Valence smart contracts on-chain. The on-chain contracts only need to verify this succinct proof to be assured that the off-chain computation was performed correctly, rather than having to re-execute the entire complex computation themselves. This model brings several advantages, including reduced gas costs, increased transaction throughput, the ability to handle private data, and the capacity to implement more sophisticated logic than would be feasible purely on-chain.

Key terms you will encounter in this documentation include:

- ZK Coprocessor: An off-chain service responsible for running "guest programs" and generating ZK proofs of their execution.

- Guest Program: A piece of software designed by developers for off-chain execution on the ZK Coprocessor. It comprises two main parts: the ZK Circuit (which defines the core ZK-provable computations) and the Controller (Wasm-compiled logic that prepares inputs for the circuit, handles its outputs, and interacts with the Coprocessor environment).

- zkVM (Zero-Knowledge Virtual Machine): An environment that can execute arbitrary programs and produce a ZK proof of that execution. The Valence ZK Coprocessor leverages such technology (e.g., SP1) to run guest programs.

- Encoders: Systems that compress blockchain state into formats suitable for ZK proofs. The Unary Encoder handles single-chain state transitions, while the Merkleized Encoder manages cross-chain state dependencies.

- Proof: A small piece of cryptographic data that demonstrates a computation was performed correctly according to a specific program, without revealing all the details of the computation.

- Public Inputs/Outputs: The specific data points that are part of the public statement being proven. The ZK proof attests that the guest program correctly transformed certain public inputs into certain public outputs.

- Witness: The complete set of inputs, both public (known to prover and verifier) and private (known only to the prover), required by a ZK circuit to perform its computation and allow the generation of a proof. The ZK proof demonstrates that the computation was performed correctly using this witness, without revealing the private inputs.

This set of documentation will guide you through understanding how this ZK system works within Valence, how to develop your own guest programs for the Coprocessor, and how to integrate these ZK-proven results with the on-chain components of the Valence Protocol. For detailed information on how blockchain state is encoded for ZK proofs and cross-chain coordination, see State Encoding and Encoders.

Valence ZK System Overview

The Valence Zero-Knowledge (ZK) system facilitates the execution of complex or private computations off-chain, with their correctness verified on-chain through cryptographic proofs. This overview describes the primary components and the general flow of information and operations within this system. The foundational concepts of ZK proofs in Valence are introduced in Introduction to Valence ZK.

At its core, the system integrates an off-chain ZK Coprocessor Service with on-chain smart contracts, primarily the Authorization and VerificationRouter contracts. A key technical challenge is encoding blockchain state into formats suitable for zero-knowledge proofs, enabling pure functions to operate on committed state transitions. For detailed information on state encoding mechanisms and cross-chain coordination, see State Encoding and Encoders.

Component Roles

The Valence ZK system comprises several key components, each with distinct responsibilities.

The ZK Coprocessor Service, operating off-chain, is a persistent service that manages ZK "guest programs." It deploys new guest programs, executes them with specific inputs, manages proving using an underlying zkVM (like SP1), and makes generated proofs available. Developers interact with this service to deploy ZK applications and initiate proof generation.

A Guest Program is application-specific code developed by users. It consists of two parts: the Controller (Wasm-compiled Rust code running in the Coprocessor's sandbox) takes input data, processes it to generate a "witness" for the ZK circuit, and coordinates proof generation. The ZK Circuit (e.g., SP1 circuit) performs the core computation and assertions, taking the witness and producing a proof and public output (Vec<u8>) that forms the primary data for on-chain contracts.

The Authorization contract serves as the entry point for submitting ZK proofs for verification. It handles ZK-specific authorization logic, checking if proof submitters are authorized for given ZK programs (by registry ID) and managing replay protection.

The VerificationRouter contract performs actual cryptographic verification of ZK proofs. This contract uses an immutable append-only design where routes map to specific verifier contracts. The Authorization contract stores the Verification Keys (VKs) and verification routes, then delegates verification to the VerificationRouter, which routes the proof to the appropriate verifier. For SP1 proofs, the SP1VerificationSwitch performs dual verification of both the program proof and domain proof.

ZK Program Flows

The following diagrams illustrate the key workflows in the Valence ZK system:

Deployment Flow

Developers prepare and register their ZK applications, initializing the application before execution. They build guest program components, deploy them to the coprocessor service (e.g., via cargo-valence), and register verification keys on-chain.

graph TD

Dev[Developer Machine<br/>- Develops Guest Program<br/>- Builds Controller + Circuit] -- Deploys via cargo-valence --> Coproc[ZK Coprocessor Service]

Dev -- Registers Verification Key --> OnChain[On-Chain Contracts<br/>Authorization + VerificationRouter]

Coproc -- Assigns --> CID[Controller ID]

OnChain -- Associates --> RegID[Registry ID]

classDef dev fill:#e3f2fd

classDef onchain fill:#f3e5f5

classDef service fill:#e8f5e8

classDef data fill:#fff3e0

class Dev dev

class OnChain onchain

class Coproc service

class CID,RegID data

Runtime Flow

Strategists execute ZK-proven actions on-chain through an operational process. They request proof generation from the coprocessor and then submit verified proofs to trigger on-chain execution.

graph TD

Strategist[Strategist<br/>- Requests proof generation<br/>- Retrieves proof<br/>- Submits ZKMessage] -- Requests proof generation --> Coproc[ZK Coprocessor Service]

Coproc -- Executes Guest Program --> Proof[ZK Proof + Public Output]

Strategist -- Retrieves proof --> Coproc

Strategist -- Submits ZKMessage + Proof --> OnChain[On-Chain Contracts<br/>Authorization + VerificationRouter]

OnChain -- Verifies proof --> Valid{Proof Valid?}

Valid -- Yes --> Processor[Processor Contract]

Processor -- Executes validated message --> State[Blockchain State Changes]

classDef strategist fill:#fff8e1

classDef service fill:#e8f5e8

classDef onchain fill:#f3e5f5

classDef data fill:#fff3e0

classDef decision fill:#ffebee

class Strategist strategist

class Coproc service

class OnChain,Processor onchain

class Proof,State data

class Valid decision

Operation

The process of executing an off-chain computation and verifying it on-chain generally follows a sequence of interconnected steps.

Development and Deployment

During development and deployment (off-chain), a developer creates a guest program, defining its Controller and ZK circuit. This guest program is compiled (Controller to Wasm, circuit to target representation) and deployed to the ZK Coprocessor service using cargo-valence CLI, which assigns a unique Controller ID. The Verification Key (VK) for the circuit is registered in an on-chain Authorization.sol contract and associated with a registry ID that the Authorization contract uses.

Proof Request and Generation

Proof request and generation (off-chain) is initiated when an off-chain entity requests the ZK Coprocessor service to execute the deployed guest program with specific input data using cargo-valence CLI. The Coprocessor runs the Controller, which generates the necessary witness for the ZK circuit. The circuit executes with this witness, and the Coprocessor generates the ZK proof and circuit's public output. The Controller may store this proof and output in its virtual filesystem.

Proof Submission and Verification

Following proof generation, the off-chain entity retrieves the ZK proof and circuit's public output from the Coprocessor. It constructs a ZKMessage, which includes the circuit's public output (forming the processorMessage), the registry ID, a blockNumber for replay protection, and the target authorizationContract address. This ZKMessage and ZK proof are submitted to the executeZKMessage function of the Authorization contract.

On-Chain Processing

This triggers on-chain processing. The Authorization contract performs initial checks, verifying sender authorization for the registry ID and ensuring replay protection using the blockNumber. If checks pass, it calls the verify function on the VerificationRouter, passing the ZK proof and public inputs. The VerificationRouter fetches the correct verifier from the route provided by the Authorization contract and delegates the proving to this verifier, passing the vk, proof, public inputs and a payload.

Execution of Proven Action

Upon successful proof verification, the Authorization contract considers the ZKMessage contents (specifically the processorMessage) authentic and authorized. It dispatches this processorMessage to the appropriate Valence Processor contract for execution, leading to blockchain state changes based on the ZK-proven off-chain computation.

This system allows Valence to securely integrate complex off-chain logic with its on-chain operations, opening up a wide range of advanced application possibilities.

End‑to‑End Flow (Services + On‑Chain)

Actors and services:

- Coprocessor service: runs controllers, manages storage/FS, computes proofs

- Domain prover service: produces recursive domain proofs and publishes latest state

- Domain implementations (e.g., Ethereum): controller, circuit, optional light‑client

- Prover backend: SP1 prover infrastructure

- Clients: cargo‑valence CLI or valence‑domain‑clients library/binary

- On‑chain: Authorization, VerificationRouter, and Processor

Flow:

- Domain prover updates: The domain prover ingests historical updates and produces a recursive wrapper proof, publishing the latest State (and wrapper VK) for consumers (see Domain Proofs).

- Pinning: Clients fetch the current Coprocessor root and pin requests with

valence-coprocessor-rootto ensure consistent openings. - Witness building: Guest programs request domain state proofs through the Coprocessor runtime (e.g.,

get_state_proof("ethereum", args)). - Proving: The Coprocessor combines witnesses with historical openings and computes the zkVM proof. A store payload directs the controller to write the proof to the virtual filesystem.

- Retrieval: Clients poll the FS path and retrieve the proof + public inputs (first 32 bytes = Coprocessor root; remainder = circuit output).

- On‑chain verification: The actor submits proof + inputs to Authorization, which delegates to VerificationRouter (e.g., SP1VerificationSwitch) with the appropriate VK and route.

- Execution: On successful verification, Authorization forwards the processorMessage to Processor, which applies state changes.

Developing Valence Coprocessor Apps

This guide is designed for developers looking to build Zero-Knowledge (ZK) applications, or "guest programs," for the Valence ZK Coprocessor. It focuses on using the valence-coprocessor-app template as a foundation. Before diving in, it is beneficial to have a grasp of the concepts presented in Introduction to Valence ZK and Valence ZK System Overview.

The valence-coprocessor-app template repository serves as the primary starting point and practical reference for this guide.

Core Structure of a Coprocessor App

A Valence Coprocessor App (a Guest Program), when based on the template, is primarily structured around two main Rust crates, which compile into the two logical parts of the Guest Program: the Controller and the ZK Circuit.

-

The

controllerCrate (compiles to the Controller): This component contains off-chain logic executed as Wasm within the Valence ZK Coprocessor's sandboxed environment. This Controller acts as an intermediary between user inputs and the ZK circuit. Key responsibilities include receiving input arguments (often JSON) for proof requests, processing inputs to generate a "witness" (private and public data the ZK circuit needs), and interacting with the Coprocessor service to initiate proof generation. The Controller handles proof computation results; it has an entrypoint function the Coprocessor calls upon successful proof generation, allowing the Controller to store the proof or log information. The Controller can utilize a virtual filesystem provided by the Coprocessor, which is FAT-16 based (implying constraints like 3-character file extensions and case-insensitive paths), for persistent data storage. -

The

circuitCrate (defines the ZK Circuit): This crate defines the ZK Circuit itself. The ZK Circuit is the heart of the ZK application, containing the actual computations and assertions whose correctness will be proven. It's typically written using a specialized language or Domain-Specific Language (DSL) that compiles down to a ZK proving system supported by the Coprocessor (for example, SP1). The ZK Circuit receives the witness data prepared by the Controller. It then performs its defined computations and assertions. If all these pass, it produces a public output (as aVec<u8>), which represents the public statement that will be cryptographically verified on-chain. This output forms a crucial part of the "public inputs" of the ZK proof.

While these two crates form the core, the template might also include an optional ./crates/domain crate. This is generally intended for more advanced scenarios, such as defining how to derive state proofs from JSON arguments or for validating block data that might be incorporated within the Coprocessor's operations, though its direct use can vary significantly depending on the specific application's needs.

General Development Workflow

Developing a Coprocessor App typically follows a sequence of steps from setup to deployment and testing:

-

Environment Setup: The initial step involves preparing your development environment. This requires installing Docker, a recent Rust toolchain, and the Cargo Valence subcommand (the

cargo-valenceCLI included in this repository). You would then clone thevalence-coprocessor-apptemplate repository to serve as the foundation for your new ZK application. For development, you can either use the public Valence ZK Coprocessor service athttps://service.coprocessor.valence.zone(default socket) or optionally run a local instance. -

ZK Circuit Development (

./crates/circuit): The next phase is to define the logic of your ZK circuit. This involves specifying the exact computations to be performed, the private inputs (the witness) that the circuit will consume, and the public inputs or outputs it will expose. The public output of your ZK circuit (aVec<u8>) is of particular importance, as this is the data that will ultimately be verified on-chain. It's essential to remember that the first 32 bytes of the full public inputs (as seen by the on-chain verifier) are reserved by the Coprocessor for its own internal root hash; your application-specific public output data will follow these initial 32 bytes. -

Controller Development (

./crates/controller): Concurrently, you'll develop the Controller logic within thecontrollercrate. This includes implementing the logic to parse incoming JSON arguments that are provided when a proof is requested for your application. You will also need to write the code that transforms these user-provided arguments into the precise witness format required by your ZK circuit. A key part of the Controller is its entrypoint function; this function is called by the Coprocessor service when a proof for your program has been successfully generated and is ready. This entrypoint typically receives the proof itself, the initial arguments that triggered the request, and any logs generated during the process. You must also implement how your Controller should handle this generated proof – a common pattern is to store it to a specific path (e.g.,/var/share/proof.bin) within its virtual filesystem using astorecommand payload directed to the Coprocessor. -

Application Build and Deployment: Once the ZK Circuit (from

circuitcrate) and Controller (fromcontrollercrate) are developed, build and deploy your Guest Program using thecargo-valenceCLI. Example:cargo-valence deploy circuit --controller ./crates/controller --circuit <circuit-crate-project-name>The CLI defaults to

https://service.coprocessor.valence.zone; specify--socket <url>if targeting a different endpoint. This compiles both crates (Controller to Wasm) and submits them to the service. On success, the service returns a controller ID (e.g.,8965...df783) used in subsequent requests. -

Requesting Proof Generation: With your Guest Program deployed and its Controller ID known, request proving with:

cargo-valence prove -j '{"value": 42}' -p /var/share/proof.bin <CONTROLLER_ID>Replace the JSON with the expected controller input. The

-ppath tells the controller where to store the resulting proof within the virtual filesystem. The CLI encapsulates this as a payload{ cmd: "store", path: "/var/share/proof.bin" }, which the service passes to the controller entrypoint after proving. -

Retrieving Proofs and Public Inputs: After proving completes and the proof is stored by your controller, retrieve it with:

cargo-valence storage -p /var/share/proof.bin <CONTROLLER_ID> | jq -r '.data' | base64 -d | jqTo view the public inputs:

cargo-valence proof-inputs -p /var/share/proof.bin <CONTROLLER_ID> | jq -r '.inputs' | base64 -d | hexdump -CThe first 32 bytes represent the Coprocessor root; your circuit output follows.

This workflow allows for an iterative development process, enabling you to test and refine your ZK guest programs effectively.

Note: As an alternative to cargo-valence, you can use the valence-coprocessor binary from the domain clients toolkit to call the same REST API directly. Both approaches interact with the Coprocessor using the endpoints and payload conventions described in Coprocessor Internals → Service API.

Client Library Usage

The valence-domain-clients crate provides a Coprocessor client and helpers that call the REST API, submit proving jobs, and poll the virtual filesystem for generated proofs.

- Default base URL:

https://service.coprocessor.valence.zone - REST base path:

/api - Typical flow:

- Submit a prove request with a “store” payload specifying a virtual filesystem path.

- Poll the storage file endpoint until the proof appears.

- Decode the proof and extract public inputs for on‑chain submission.

Headers used by clients (see Coprocessor Internals → Service API for details):

valence-coprocessor-circuit: hex controller IDvalence-coprocessor-root: historical root hex to pin requestsvalence-coprocessor-signature: optional signature over JSON body

Example (async Rust):

use serde_json::json; use valence_domain_clients::clients::coprocessor::CoprocessorClient; #[tokio::main] async fn main() -> anyhow::Result<()> { let client = CoprocessorClient::default(); let circuit = "<controller_id_hex>"; let root = client.root().await?; // optional pin to current // Submit prove (with store payload) and poll storage for the proof let args = json!({ "value": 42 }); let proof = client.get_single_proof(circuit, &args, &root).await?; // Decode base64 proof and inputs let (_proof_bytes, inputs) = proof.decode()?; println!("inputs length: {}", inputs.len()); Ok(()) }

Incorporating Verifiable External State

Guest programs on the Valence Coprocessor can be designed to utilize verifiable state from external blockchains, like Ethereum. This allows ZK applications to react to or incorporate off-chain data in a trust-minimized way. Services such as the state proof service facilitate this by generating state proofs (e.g., Merkle proofs for account balances or storage slots on Ethereum at specific block heights). Currently, this interaction for fetching external state is often achieved via ABI-encoded HTTP calls, though future implementations might support other protocols like WebSockets.

When developing a guest program, you would design its Controller (within the controller crate) to accept such state proofs as part of its input. The ZK circuit can then use the proven external state in its computations. The resulting ZK proof from the Valence Coprocessor will thus attest to the correctness of operations performed on this externally verified data. More detailed architectural considerations for this pattern, including how the Coprocessor environment might support or interact with such external proofs, are discussed in ZK Coprocessor Internals.

Apps ownership

The apps will be owned by a private key, only if their deployment is signed by the client. The Valence Domain client employs an environment variable VALENCE_SIGNER for specifying its secret key during signature processes. When a signature becomes available for a deployed app, the controller bytecode, storage, and dedicated prover list will only be modifiable upon signing a request using the provided secret key.

The initial phase involves installing the valence-coprocessor binary:

cargo install valence-domain-clients \

--no-default-features \

--features coprocessor-bin \

--bin valence-coprocessor

You can verify the installation by running:

valence-coprocessor --version

To create the signer key, utilize the foundry tool:

cast wallet new --account valence

The private key can be retrieved as follows:

cast wallet private-key --account valence

An easy way to store it in the appropriate environment variable:

export VALENCE_SIGNER='{"SecretEccNistP256":"'$(cast wallet private-key --account valence)'"}'

This readies the environment for utilizing an EccNistP256 signer. Every invocation of the valence-coprocessor binary will leverage such environment variable and sign the requests accordingly.

To view the allocated GPU workers associated with the key:

$ valence-coprocessor provers get

Using valence signer `EccNistP256(...)`...

Fetching provers...

{"owned":[],"public":["wss://prover.coprocessor.valence.zone"]}

The user may assign a specific prover to their app:

valence-coprocessor provers add 'wss://prover.coprocessor.valence.zone'

The co-processor will cycle through the available dedicated GPU provers of the app to generate proofs.

Integrating ZK Proofs with On-Chain Contracts

This document details the process of integrating Zero-Knowledge (ZK) proofs, generated by a Valence Coprocessor guest program, with the Valence Protocol's on-chain smart contracts. It assumes an understanding of the ZK system as outlined in Valence ZK System Overview and how guest programs are developed as described in Developing Valence Coprocessor Apps.

The core of on-chain integration revolves around submitting the ZK proof and its associated public data to the Authorization contract (CosmWasm or EVM), which then collaborates with a VerificationRouter to cryptographically verify the proof's authenticity and correctness. For SP1 proofs, the system uses an SP1VerificationSwitch that performs dual verification: validating both the program proof (using the provided VK) and the domain proof (using the Coprocessor root commitments).

Preparing Data for On-Chain Submission

After your guest program successfully executes on the ZK Coprocessor and a proof is generated, two key pieces of data are essential for on-chain interaction:

- The ZK Proof: This is the raw cryptographic proof data (e.g., SP1 proof bytes) generated by the Coprocessor, attesting to the correct execution of your guest program's ZK circuit.

- The Circuit's Public Output: Your ZK circuit is designed to produce a public output (

Vec<u8>). This output is critical because it represents the data that, once proven correct by the ZK proof, will be used to form theprocessorMessagefor execution by the on-chain Valence Processor contract. When the full "public inputs" are presented to the on-chain verifier, the first 32 bytes contain the Coprocessor Root (historical commitments). The remaining bytes are the circuit output your app defines. For how domain and historical openings bind values to this root, see Domain Proofs.

An off-chain system, such as a script, bot, or backend service, is responsible for retrieving these pieces of data from the Coprocessor (typically after the guest program stores them in its virtual filesystem) and then initiating the on-chain transaction.

The ZKMessage Structure

To submit a ZK-proven action to the Valence Protocol, the off-chain system must construct a ZKMessage. This structure is specifically designed for the executeZKMessage function within the Authorization.sol contract. The ZKMessage encapsulates all necessary information for the on-chain contracts to process the request:

| Field | Type | Description |

|---|---|---|

registry | uint64 | Unique identifier for the deployed ZK guest program. Maps to ZK authorization registry in the Authorization contract. |

blockNumber | uint64 | Current or recent block number for replay protection. Prevents reuse of old proofs if validateBlockNumberExecution is enabled for the registry. |

authorizationContract | address | Address of the target Authorization contract. Can be address(0) to allow any authorization contract, or specific address for binding. |

processorMessage | bytes | Core payload dispatched to Processor contract if ZK proof is valid. Contains the actual message to be executed, derived from the circuit's public output. |

On-Chain Verification Sequence

Once the ZKMessage is constructed and the ZK proof is obtained, the off-chain system submits these to the executeZKMessage function of the Authorization.sol contract. The on-chain processing then unfolds as follows:

-

Initial Checks: The Authorization contract first performs several preliminary checks. It verifies if the

msg.sender(the account submitting the transaction) is authorized to provide proofs for the givenregistryID. It also typically checks theblockNumberfrom theZKMessageagainst its record of the last executed block for thatregistryto prevent replay attacks. -

Delegation to VerificationRouter: If the initial checks pass, the Authorization contract delegates the task of cryptographic proof verification to the VerificationRouter contract whose address it has been configured with using a

route. It calls averifyfunction on the router, passing along the ZK proof, the verifying key (VK), the public inputs for the proof and a payload. -

Proof Verification: The VerificationRouter retrieves the verifier associated by the route and delegates the verification to that verifier. For SP1 proofs, the

SP1VerificationSwitchperforms dual verification:- Program Proof: Uses the provided VK to verify the circuit's computation with your specific public inputs

- Domain Proof: Uses the stored

domainVKto verify the first 32 bytes (coprocessor root hash) which acts as a commitment to all Coprocessor state integrity

The Coprocessor Root hash implicitly contains all embedded state proofs of domains relevant to the ZK proof, managed via a Sparse Merkle Tree (SMT). Every new block appended to chains relevant to the proof's domain is included in this SMT with a ZK domain proof, and the verifications of these inclusions are cryptographically embedded into this root. Both proofs must pass for successful verification. If valid, the router returns a success status to the Authorization contract.

-

Dispatch to Processor: If the VerificationRouter confirms the proof's validity, the Authorization contract considers the

processorMessagewithin theZKMessageto be authentic and authorized for execution. It then typically updates its state for replay protection (e.g., storing theblockNumberas the last executed for thatregistry) and dispatches theprocessorMessageto the appropriate Valence Processor contract. -

Execution by Processor: The Processor contract receives the

processorMessageand executes the sequence of on-chain actions (e.g., calls to various Valence Libraries or other smart contracts) as defined within that message. This is where the result of your ZK-proven off-chain computation translates into tangible on-chain state changes.

This integration pathway ensures that off-chain computations, once proven correct by the ZK Coprocessor, can be securely and reliably acted upon by the Valence on-chain contracts.

Verifying Keys

- Guest program VKs can be fetched from the Coprocessor via

GET /api/circuit/vk(base64) for a given controller context. - For recursive domain proofs, a domain prover service publishes a stable wrapper VK (e.g., via

/api/consts). On‑chain verifiers can bind to this VK and the expected controller ID.

ZK Coprocessor Internals

This document provides an in-depth look into the internal architecture and operational mechanics of the Valence ZK Coprocessor service. It is intended for those who wish to understand more about the Coprocessor's design beyond the scope of typical application development. Familiarity with Valence ZK System Overview is assumed.

The Valence ZK Coprocessor is designed as a persistent off-chain service that registers and executes Zero-Knowledge (ZK) guest applications.

Service Architecture

The Coprocessor service consists of several coordinated components that work together to provide a complete ZK execution environment. It's important to note a key architectural separation: the coprocessor itself (which handles API requests, controller execution, and virtual filesystem management) is distinct from the prover. While they work in tandem, they can be deployed and scaled independently. For instance, Valence runs a dedicated, high-performance prover instance at prover.timewave.computer:37282. Coprocessor instances, including those run locally for development, can connect to this remote prover (typically requiring a VALENCE_PROVER_SECRET for access). This separation also allows developers to run a local coprocessor instance completely isolated from a real prover, using mocked ZK proofs. This is invaluable for rapid iteration and debugging of controller logic without incurring the overhead of actual proof generation.

The main components of the Coprocessor service include:

The API Layer serves as the primary external interface, exposing REST endpoints (typically on port 37281 for the coprocessor service itself) for core operations. Developers can deploy guest programs by submitting controller and circuit bundles, they can request proofs for deployed programs, query the status of ongoing tasks, and retrieve data stored in the virtual filesystem such as generated proofs or execution logs.

Request Management & Database - This component validates incoming requests and queues them for processing. It maintains persistent storage for deployed guest program details including Controller IDs, circuit specifications, and controller bundles, while also tracking proof generation status and execution metadata.

The Controller Executor / Sandbox provides an isolated execution environment for controller crate logic. This sandbox runs a WebAssembly runtime for controller code and provides a crucial interface that allows controllers to signal when witness preparation is complete and proof generation should commence. Controllers can also perform filesystem operations through this interface.

Proving Engine Integration - Orchestrates the actual ZK proof generation process using underlying zkVM systems like SP1 or Groth16. This component manages prover resources, handles the translation of circuits and witnesses into the required formats for specific proving backends, and processes the resulting proof data and public outputs.

The Virtual Filesystem Manager allocates FAT-16 based virtual filesystems to each guest program, enabling controllers to store proofs and logs through store commands. This filesystem has certain limitations on filename length and character sets that developers must consider.

The Coprocessor Process

Coprocessor Root Hash is a notable internal detail where the Coprocessor prepends a 32-byte hash to application-specific public outputs from the ZK circuit. This combined data forms the complete "public inputs" that are cryptographically bound to the proof, ensuring that proofs are tied to the specific Coprocessor instance that produced them. On-chain verifiers must account for this structure when validating proofs.

Task Lifecycle involves proof generation requests progressing through several distinct stages: initial queuing, controller execution for witness generation, circuit proving, and finally proof delivery back to the controller entrypoint. The API provides mechanisms to track task status throughout this lifecycle.

Persistent Job Queues enable the Coprocessor service to handle multiple concurrent proof requests efficiently and reliably through persistent job queues, and worker nodes for computationally intensive proving tasks.

Handling Verifiable State Proofs

Guest programs can incorporate state from external blockchains through a structured integration pattern that enhances their capabilities significantly.

External State Proof Services, such as the eth-state-proof-service, connect to external chains via RPC, query desired state at specific block heights, and construct Merkle proofs relative to known block hashes. These services play a crucial role in bridging external blockchain data into the ZK environment.

The guest program integration follows a clear pattern. During proof ingestion, the controller receives external state proofs via JSON payloads and extracts state values along with relevant metadata like block hashes. In the witness preparation phase, the controller incorporates this external state into the witness for the ZK circuit. The circuit logic then performs computations using the external state data, with the option to verify external proofs directly within the circuit for stronger security guarantees.

Trust Model Considerations - The ZK proof fundamentally attests that given a set of provided inputs (which may include externally proven state at the latest block height), the circuit executed correctly to produce the specified outputs. The Coprocessor provides a state proof interface for each chain that exposes a light client prover wrapped in a recursive circuit. All light client circuits are initialized at a trusted height, where block hash and committee composition are taken as "weakly subjective" public inputs.

Service API (Access & Discovery)

The Coprocessor serves an OpenAPI/Swagger UI and specification alongside its REST endpoints.

You can programmatically discover available routes by fetching the spec. For example, to list available paths:

curl -s https://service.coprocessor.valence.zone/spec | jq -r '.paths | keys[]'

# or against local:

curl -s http://127.0.0.1:37281/spec | jq -r '.paths | keys[]'

Notes

- Virtual filesystem is FAT‑16 emulated; file extensions must be ≤ 3 characters, paths are case‑insensitive.

- The

payloadin proving requests is commonly{ "cmd": "store", "path": "/var/share/proof.bin" }to instruct the controller to store the generated proof.

Related Services

Domain prover services publish recursive proofs and a stable wrapper VK for domains. For how domain and historical proofs are modeled (and how the domain prover feeds the Coprocessor and on‑chain verification), see Domain Proofs. For domain implementation patterns, see State Encoding and Encoders.

Client Conventions

When calling the Coprocessor, clients use a few standard conventions:

Headers

valence-coprocessor-circuit: hex controller ID (context)valence-coprocessor-root: historical root hex (pinning to a known SMT root)valence-coprocessor-signature: optional signature over JSON body (if a signer is configured)

Prove payload

- Include a “store” payload to direct the controller to write the generated proof to the virtual filesystem, for example:

{ "args": { … }, "payload": { "cmd": "store", "path": "/var/share/proofs/<id>.bin" } }

Virtual filesystem

- FAT‑16 emulation with 3‑character file extensions and case‑insensitive paths. A common pattern is to store under

/var/share/proofs/….

Public inputs layout

- The public inputs buffer starts with a 32‑byte Coprocessor Root, followed by the circuit‑defined output bytes used on‑chain.

Sparse Merkle Trees in Valence

A sparse Merkle tree (SMT) is a specialized Merkle tree with leaf indices defined by an injective function from predefined arguments. The verification key of a ZK circuit, being injective to the circuit's definition, serves as an index for available programs.

Since ZK proofs are uniquely tied to their verification keys, we can use these keys to index and organize proofs from different programs. This makes each verification key a unique identifier for its corresponding proof within the collection.

Merkle Tree

A Merkle tree is an authenticated data structure consisting of leaves and nodes that form a tree shape. Each node in this tree represents the cryptographic hash of its children, while the leaves hold an arbitrary piece of data—usually the hash value of some variable input.

For a hash function H, if we insert data items A, B, C into a Merkle tree, the resulting structure would look like:

graph TB

%% Root node

r["R := H(t10, t11)"]

%% Level 1

m1["t10 := H(t00, t01)"] --> r

m2["t11 := H(t02, t03)"] --> r

%% Level 2

c1["t00 := H(A)"] --> m1

c2["t01 := H(B)"] --> m1

c3["t02 := H(C)"] --> m2

c4["t03 := 0"] --> m2

Figure 1: Basic Merkle tree structure. The node labeling uses a coordinate system where the first digit represents the tree level (0 = leaves, 1 = intermediate nodes, etc.) and the second digit represents the position at that level. Each parent node is computed as the hash of its two children in this binary tree structure.

Membership Proof

A Merkle tree serves as an efficient data structure for validating the membership of a leaf node within a set in logarithmic time, making it especially useful for handling large sets and well-suited for random insertion patterns. A Merkle opening (or Merkle proof) represents an array of sibling nodes that outline a Merkle Path leading to a commitment Root. If the verifier possesses the root and employs a cryptographic hash function, the pre-image of the hash is non-malleable; in a cryptographic hash, it's unfeasible to discover a set of siblings resulting in the root, except for the valid inputs. Given that the leaf node is known to the verifier, a Merkle Proof will consist of a sequence of hashes leading up to the root. This allows the verifier to compute the root value and compare it with the known Merkle root, thereby confirming the membership of any provided alleged member without relying on the trustworthiness of the source. Consequently, a single hash commitment ensures that any verifier can securely validate the membership of any proposed member supplied by an untrusted party.

To prove that C is in the tree, the Merkle proof includes the sibling nodes along the path from C to the root: [t03, t10]. So the verifier, that knows R beforehand, will compute:

t02 := H(C)t11 := H(t02, t03)R' := H(t10, t11)

If R == R', then C is a member of the set.

Note that the depth of the tree is the length of its Merkle opening, that is: we open up to a node with depth equal to the length of the proof.

Sparse Data

Let's consider a public function f that accepts a member and returns a tuple. This tuple consists of the index within the tree as a u64 value, and the hash of the leaf: (i, h) = f(X).

For the example above, let's assume two members:

(3, a) := f(A)(1, b) := f(B)

graph TB

%% Root node

r["R := H(t10, t11)"]

%% Level 1

m1["t10 := H(t00, t01)"] --> r

m2["t11 := H(t02, t03)"] --> r

%% Level 2

c1["t00 := 0"] --> m1

c2["t01 := b"] --> m1

c3["t02 := 0"] --> m2

c4["t03 := a"] --> m2

The primary distinction of a sparse Merkle tree lies in the deterministic leaf index, making it agnostic to input order. In essence, this structure forms an unordered set whose equivalence remains consistent irrespective of the sequence in which items are appended.

In addition to membership proofs sparse Merkle trees also support generating proofs of non-membership. To achieve this, we carry out a Merkle opening at the specified target index, and expect the returned value to be 0.

Let's assume a non-member X to be (0, x) := f(X). To verify that X is not in the tree, given the root R and the non-membership proof [b, t11], the verifier:

- Computes

(0, x) := f(X)to find whereXshould be located (index 0) - Reconstructs

t10 := H(0, b)using the empty slot (0) and siblingb - Computes the root

R' := H(t10, t11)and checks ifR' == R

If R == R', then 0 is at the slot of X. Since we know X to not be the pre-image of 0 in H, then X is not a member of the tree.

The Valence SMT

Valence's sparse Merkle tree is designed to utilize the hash of the verifying key generated by the ZK circuit as its index. The tree's leaf data will encompass the proof and input arguments for the ZK program. For this particular implementation, we can consider the input arguments as a generic type, which will be specifically defined during development. These input arguments will constitute the key-value pairs that define a subset of the contract state essential for state transition. The proof will be a vector of bytes.

The tree depth is adaptive, representing the smallest value required to traverse from a leaf node to the root, given the number of elements involved. This approach avoids writing nodes that contain unused entries. So if a tree contains two adjacent nodes indexed at [(0,0), (0,1)], the Merkle opening will contain a single element: the sibling leaf of the validated node.

If the tree comprises two nodes with indices [(0,0), (0,2)], the Merkle opening will have two elements, allowing for a complete traversal from the leaves to the root.

Precomputed Empty Subtrees

The Valence SMT implementation includes a precomputed set of empty subtrees based on the selected hash primitive. For example, when a tree contains only empty nodes, all hash values are constant:

graph TB

%% Root node

r["R := H(t10, t11)"]

%% Level 1

m1["t10 := H(t00, t01)"] --> r

m2["t11 := H(t02, t03)"] --> r

%% Level 2

c1["t00 := 0"] --> m1

c2["t01 := 0"] --> m1

c3["t02 := 0"] --> m2

c4["t03 := 0"] --> m2

In practice, sparse Merkle trees often have many empty positions, especially when the index space is large but only a few positions are occupied. This creates opportunities for optimization through precomputation.

Consider a more realistic scenario where we have a sparse tree with a single leaf X at index 2:

graph TB

%% Root

r["R := H(t20, K2)"]

%% Level 1

t20["t20 := H(K1, t11)"] --> r

t21["K2"] --> r

%% Level 2

m1["K1"] --> t20

m2["t11 := H(X, K0)"] --> t20

%% Level 3

c3["X"] --> m2

c4["K0"] --> m2

Rather than computing (K0, K1, K2) each time, these values can be precomputed since they represent known constants: K0 := H(0), K1 := H(K0, K0), K2 := H(K1, K1).

By using SMTs, Valence can efficiently manage and verify large collections of authenticated data, including ZK proofs from coprocessor applications and commitments to program states.

Valence ZK Guest Environment

This document describes the specific execution environment provided by the Valence Coprocessor for "guest applications." Understanding this environment is crucial for developers building robust and efficient ZK applications. It complements the information found in Developing Valence Coprocessor Apps.

When a guest program's controller crate logic is executed by the Valence ZK Coprocessor, it runs within a specialized, sandboxed environment. This environment imposes certain characteristics and provides specific interfaces for interaction.

Execution Sandbox

The primary purpose of the sandbox is to securely execute the guest program's Rust code (often compiled to WebAssembly or a similar intermediate representation) that is responsible for generating the witness for the ZK circuit. This isolation prevents a guest program from interfering with the Coprocessor service itself or other concurrently running guest programs.

While the exact nature of the sandbox can evolve, developers should assume an environment with constrained resources. This means that overly complex or long-running computations within the controller crate (before handing off to the ZK circuit for proving) should be approached with caution. The main computationally intensive work should ideally be designed into the ZK circuit itself, as that is what the proving system is optimized for.

Virtual Filesystem

Each deployed guest program is provided with its own private virtual filesystem by the Coprocessor. This filesystem is essential for storing intermediate data, logs, and most importantly, the generated ZK proofs.

Key characteristics and limitations of this virtual filesystem, as indicated by the valence-coprocessor-app template examples, include:

- FAT-16 Basis: The underlying structure often emulates a FAT-16 filesystem. This implies certain legacy constraints that developers must be aware of.

- Extension Length: File extensions are typically limited to a maximum of three characters (e.g.,

.bin,.txt,.log). - Case Insensitivity: File and directory names are generally treated as case-insensitive (e.g.,

Proof.binandproof.binwould refer to the same file). - Path Structure: Paths are typically Unix-like (e.g.,

/var/share/my_proof.bin). - Interaction: The

controllercrate interacts with this filesystem by sending specific commands to the Coprocessor service rather than through direct OS-level file I/O calls. For example, to store a generated proof, thecontrollerconstructs astorecommand with the target path and data, which the Coprocessor then writes to the program's virtual disk image.

Developers should design their controller logic to work within these constraints, particularly when choosing filenames for storing proofs or other outputs.

Interfacing with the Coprocessor Service

From within its sandboxed execution, the controller crate logic needs to communicate with the host Coprocessor service for several key operations:

- Signaling Witness Readiness: After processing inputs and preparing the witness for the ZK circuit, the

controllermust inform the Coprocessor that it is ready for the proving phase to begin. - Receiving Proof Results: The Coprocessor calls a designated entrypoint function within the

controllercrate upon completion of a proof generation task (successful or failed). This entrypoint receives the proof data, initial arguments, and any logs. - Filesystem Operations: As mentioned above, storing data (like the received proof) or logging information involves sending structured requests to the Coprocessor to perform actions on the program's virtual filesystem.

The exact mechanism for this interaction (e.g., specific function calls, message passing, predefined environment variables or handles) is defined by the Coprocessor's execution environment for guest programs.

Resource Constraints

Guest applications run with finite system resources including limited memory, CPU time, and storage space. Developers should aim for efficiency in their controller crate logic, focusing on input processing, witness generation, and handling results rather than performing heavy computations that are better suited for the ZK circuit itself.

Understanding these environment constraints enables developers to build ZK applications that run efficiently on the Valence Coprocessor.

State Encoding and Encoders

This document explains how the Valence ZK Coprocessor handles state encoding for zero-knowledge proofs and cross-chain state synchronization. Understanding these concepts is essential for building applications that work across multiple blockchains.

Implementation Status: The state encoding mechanisms described in this document represent the design goals and architecture for the Valence ZK Coprocessor. While the core coprocessor infrastructure exists (as shown in the valence-coprocessor-app template), the full state encoding and cross-chain coordination features are still in active development.

The State Encoding Challenge

The core challenge in ZK coprocessor design lies in encoding state. ZK applications are pure functions that must utilize existing state as arguments to produce an evaluated output state. This means we need a way to compress blockchain state into a format suitable for zero-knowledge proofs.

For any state transition, we can describe it as a pure function: f(A) = B, where A is the initial state and B is the resulting state after applying function f.

Pure Functions in zkVMs

The Valence ZK Coprocessor leverages zero-knowledge virtual machines (zkVMs) to execute Rust programs and generate proofs of their execution. Specifically, Valence uses a RISC-V zkVM, currently Succinct's SP1. For state encoding purposes, these applications must be structured as pure functions f(x) = y.

The zkVM workflow for state transitions follows the following pattern:

- Application definition: The state transition logic is written in Rust as a pure function

- Key generation: The compiled application produces a proving key

pkand verifying keyvk - Proof generation: Given inputs

x, the zkVM callsprove(pk, x)to generate proofp - Verification: The proof is verified by calling

verify(vk, x, y, p)

This pure function constraint is what necessitates the state encoding mechanisms described in this document - we must compress mutable blockchain state into immutable inputs and outputs suitable for zero-knowledge proving.

Unary Encoder

The Unary Encoder compresses account state transitions into zero-knowledge proofs. It handles the transformation from on-chain state mutations to ZK-provable computations.

Basic State Transition Example

Consider an account with a key-value store that maps addresses to balances. A traditional on-chain transfer function might look like:

#![allow(unused)] fn main() { fn transfer(&mut self, signature: Signature, from: Address, to: Address, value: u64) { assert!(signature.verify(&from)); assert!(value > 0); let balance_from = self.get(&from).unwrap(); let balance_to = self.get(&to).unwrap_or(0); self.insert(from, balance_from.checked_sub(value).unwrap()); self.insert(to, balance_to.checked_add(value).unwrap()); } }

For ZK execution, we can create a trusted version that delegates signature verification to the ZK circuit:

#![allow(unused)] fn main() { fn transfer_trusted(&mut self, from: Address, to: Address, value: u64) { let balance_from = self.get(&from).unwrap(); let balance_to = self.get(&to).unwrap_or(0); self.insert(from, balance_from - value); self.insert(to, balance_to + value); } }

ZK Application Structure

In the current Valence Coprocessor template, ZK applications consist of two components: a controller and a circuit. The controller processes inputs and generates witnesses, while the circuit performs the ZK-provable computation.

Controller (processes JSON inputs and generates witnesses):

#![allow(unused)] fn main() { pub fn get_witnesses(args: Value) -> anyhow::Result<Vec<Witness>> { let (signature, from, to, value) = parse_transfer_args(args); // Verify signature off-chain and prepare witness data signature.verify(&from)?; let witness_data = TransferWitness { from, to, value, initial_state: get_current_state(), }; Ok(vec![Witness::Data(witness_data.encode())]) } }

Circuit (performs ZK computation):

#![allow(unused)] fn main() { pub fn circuit(witnesses: Vec<Witness>) -> Vec<u8> { let witness_data = TransferWitness::decode(witnesses[0].as_data().unwrap()); let mut state = witness_data.initial_state; // Perform trusted transfer (signature already verified in controller) state.transfer_trusted(witness_data.from, witness_data.to, witness_data.value); // Return state commitment for on-chain verification state.commitment().encode() } }

Note: The above examples show the conceptual structure for state encoding. The current template implementation uses simpler examples (like incrementing a counter), as the full state encoding mechanisms are still in development.

On-Chain Verification

When the target chain receives the proof and circuit output, it can verify execution correctness:

#![allow(unused)] fn main() { fn verify(&self, proof: Proof, circuit_output: Vec<u8>) { let current_commitment = self.state.commitment(); // Extract the new state commitment from circuit output let new_commitment = StateCommitment::decode(circuit_output); // Verify the ZK proof proof.verify(&self.vk, &[current_commitment, new_commitment].concat()); // Apply the proven state transition self.state.apply_commitment(new_commitment); } }

Merkleized Encoder

For cross-chain applications, the Merkleized Encoder handles state transition dependencies across multiple domains. This enables parallel execution while maintaining correctness for chains that depend on each other's state.

Cross-Chain State Dependencies

Consider three chains where:

- Chain 1 executes independently

- Chain 2 executes independently

- Chain 3 depends on the result from Chain 1

The Merklelized Encoder creates a Merkle tree structure:

R (Root)

/ \

M1 M2

/ \ / \

C1 C2 C3 0

| | |

Chain1 Chain2 Chain3

Each leaf contains the encoded state transition for its respective chain:

C1:(S1 → T1), K1(Chain 1 transition)C2:(S2 → T2), K2(Chain 2 transition)C3:(S3 → T3), K3(Chain 3 transition, depends on T1)

Parallel and Sequential Execution

The ZK coprocessor can execute proofs in parallel where possible:

- Independent execution: Chain 1 and Chain 2 can execute in parallel

- Sequential dependency: Chain 3 waits for Chain 1's result

T1 - State sharing: Chain 3 receives

T1and validates the foreign state while processing

Optimized Verification

The Merkle tree structure provides logarithmic verification efficiency. Each chain only needs:

- Its own state transition arguments

- The Merkle path to the root

R - Any dependent state from other chains

For example, Chain 2 only needs C1 and M2 for its Merkle proof, not the complete state data from Chains 1 and 3.

On-Chain Proof Distribution

Each chain receives the minimal data needed for verification:

- Chain 1:

(R1, T1) - Chain 2:

(R2, T2) - Chain 3:

(R3, T3, R1, T1, C2)

Chain 3's verification process includes:

- Verify its own transition:

verify(R3, T3) - Verify the dependency:

verify(R1, T1) - Query the foreign state:

query(T1) - Reconstruct the commitments and validate the Merkle root

This architecture enables the Valence Coprocessor to securely and efficiently coordinate complex cross-chain programs.

Domain Implementations (Examples)

Domains are pluggable modules that supply controller logic and circuits for chain‑specific state proofs. Each implementation typically includes:

- A controller (Wasm) that knows how to fetch/structure state inputs

- A circuit (zkVM target) that verifies the state proof and binds it to the Coprocessor root

- Optional services (e.g., light clients)

Example: Ethereum (as one implementation)

- Build storage layouts with a builder (e.g., mapping indices, combined slots, variable‑length values)

- Create

StateProofArgsfor the target account/storage and optional payload - Produce a

StateProofwitness that the Coprocessor can open to the historical root and verify

New domains can follow the same pattern: define controller APIs that emit domain‑specific Witness::StateProof entries, implement a circuit that verifies those proofs, and optionally provide a service component for light‑client or state synthesis. For how these proofs bind to the Coprocessor root via domain and historical openings, see Domain Proofs.

Domain Proofs

This document explains how domain proofs are modeled and validated in the Valence Coprocessor and how historical proofs are bound to a domain proof.

Domain proofs bind chain‑specific state (for example, an Ethereum account/storage proof) to a single Coprocessor root. The Coprocessor root is the root of a global Historical tree. Each leaf of that tree is a domain root, and each domain root is the root of a per‑domain sparse Merkle tree that maps block number to state root. Guest programs provide domain‑specific state proofs; the Coprocessor augments them with openings up to the Coprocessor root and proves the combined statement. For API access and client conventions, see Coprocessor Internals and for on‑chain consumption, see On‑Chain Integration.

Structure